Saturday, Sept 21 2024

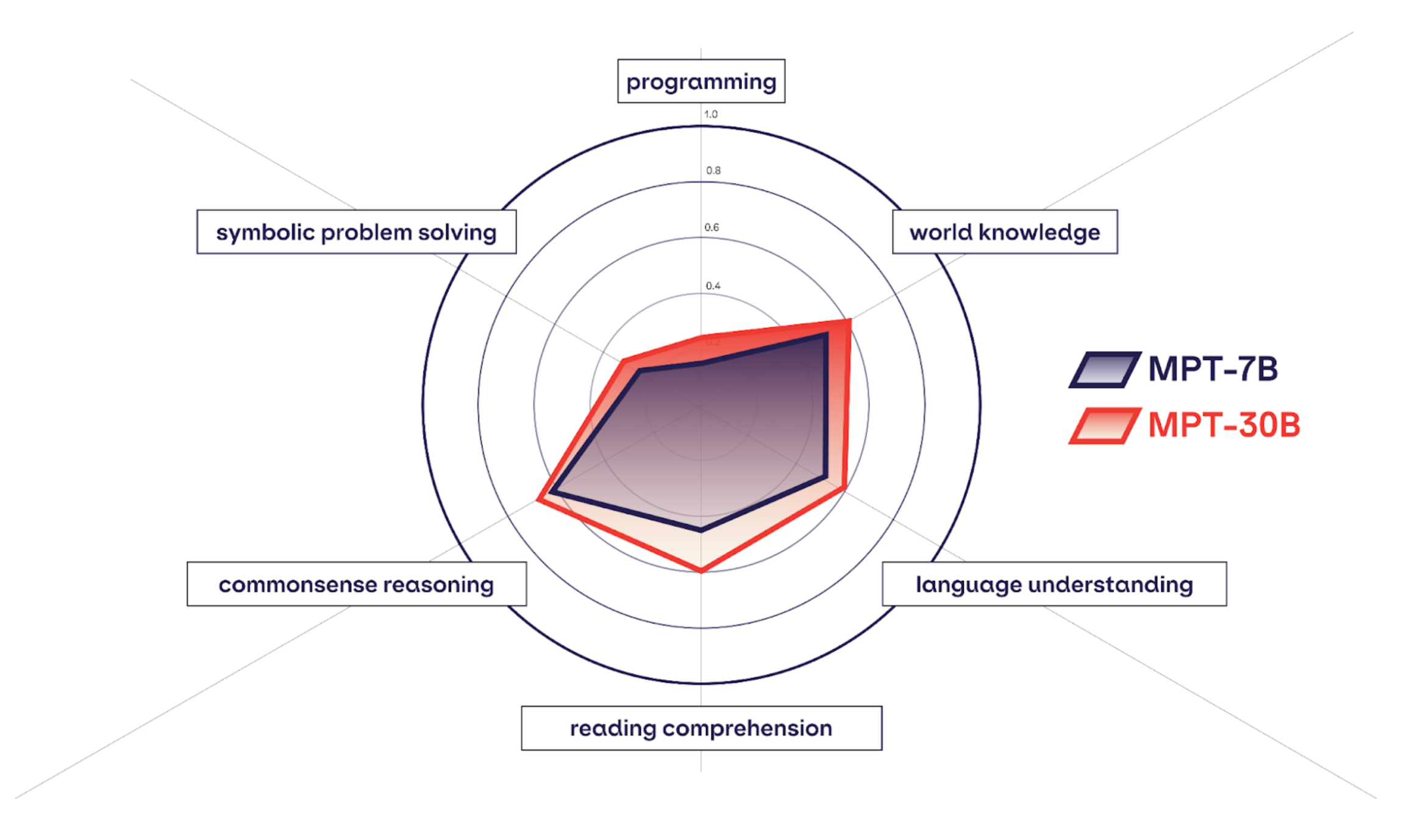

MPT-30B: Raising the bar for open-source foundation models

By A Mystery Man Writer

MPT-30B Open-Source LLM from MosaicML!

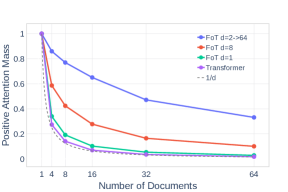

[2307.03170] Focused Transformer: Contrastive Training for Context Scaling

Guide Of All Open Sourced Large Language Models (LLMs), by Luv Bansal

Train Faster & Cheaper on AWS with MosaicML Composer

(PDF) A Review of Transformer Models

MPT-30B: MosaicML Outshines GPT-3 With A New LLM To Push The

12 Open Source LLMs to Watch

MPT-30B: Raising the bar for open-source, commercially available

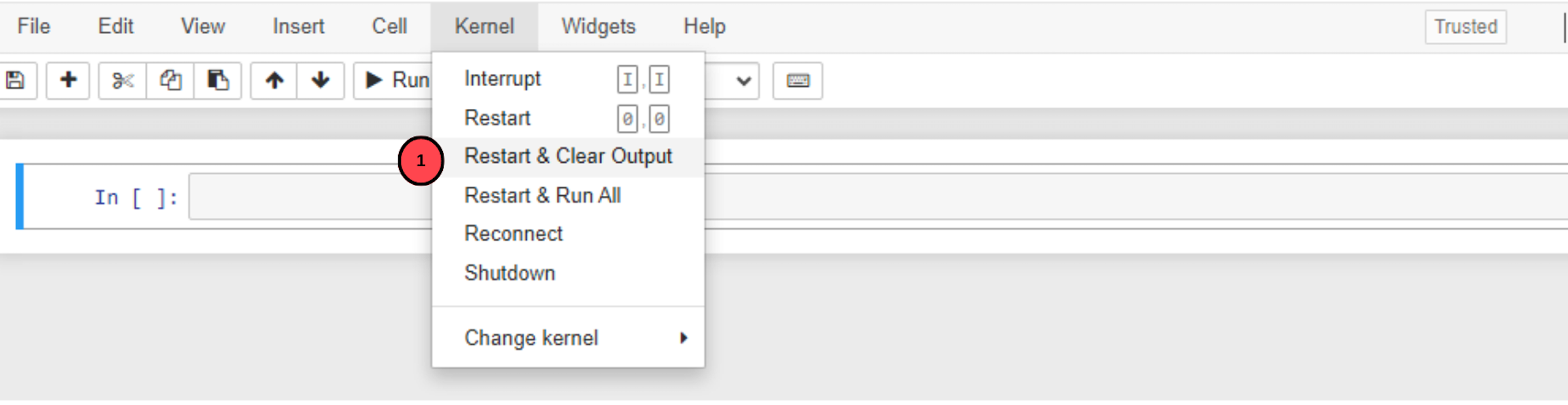

How to Use MosaicML MPT Large Language Model on Vultr Cloud GPU

The History of Open-Source LLMs: Better Base Models (Part Two)

Applied Sciences October-2 2023 - Browse Articles

BAH-ML-ASC/MPT-30B-Instruct · Hugging Face

Related searches

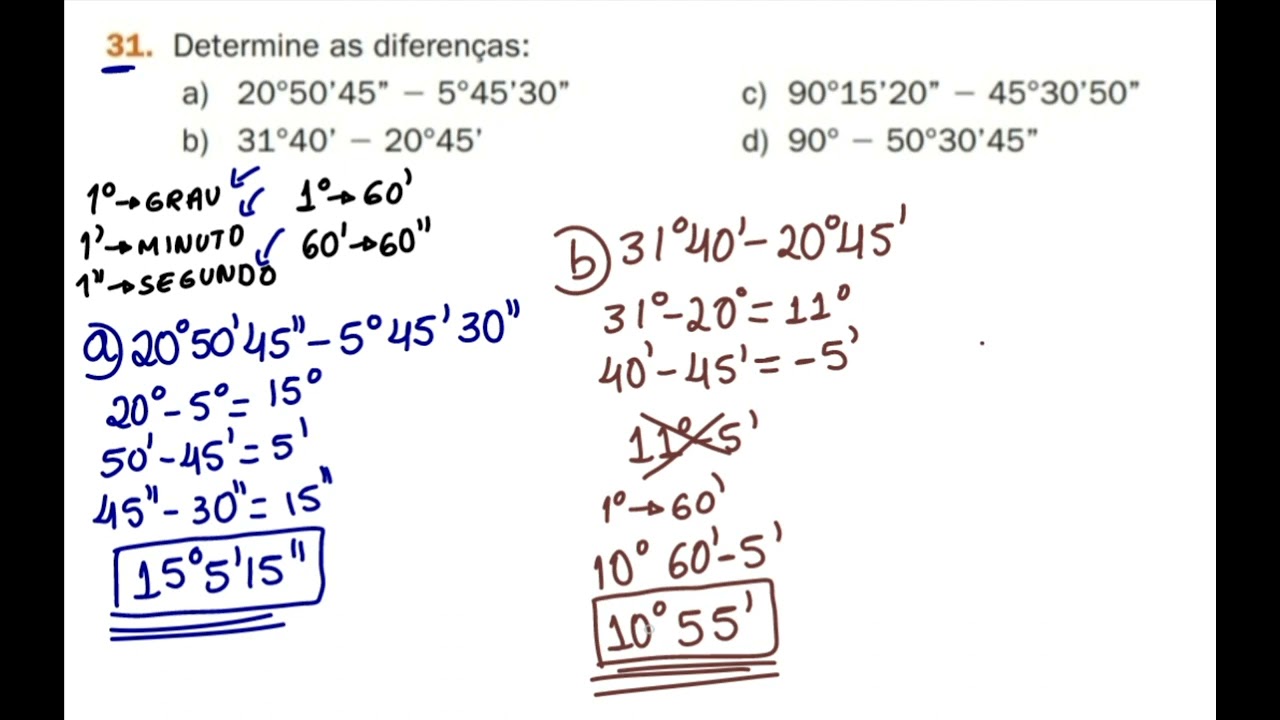

- 31- Determine as diferenças a)20°50'45'' - 5°45'30'' b)31°40' - 20° 45' c)90°15'20''-45°30'50''

- TORNO MECÂNICO ROMI TORMAX 30B - 650MM X 2200MM - REVISADO

- Pill Finder: 30 B Brown Round

- Rat mammary tumor images. Tumor size (mm) (A) DMBA group (n = 4

- Torno Mecânico Romi Tormax 30B REFORMADO – Megafer Máquinas

©2016-2024, changhanna.com, Inc. or its affiliates