DeepSpeed Compression: A composable library for extreme compression and zero-cost quantization

By A Mystery Man Writer

Large-scale models are revolutionizing deep learning and AI research, driving major improvements in language understanding, creative text generation, multilingual translation, and much more. But despite their remarkable capabilities, the models’ large size creates latency and cost constraints that hinder the deployment of applications built on top of them. In particular, increased inference time and memory consumption […]

Michel LAPLANE (@MichelLAPLANE) / X

GitHub - microsoft/DeepSpeed: DeepSpeed is a deep learning optimization library that makes distributed training and inference easy, efficient, and effective.

如何评价微软开源的分布式训练框架deepspeed? - 菩提树的回答- 知乎

DeepSpeed download

JAX: Accelerating Machine-Learning Research with Composable Function Transformations in Python

This AI newsletter is all you need #6, by Towards AI Editorial Team

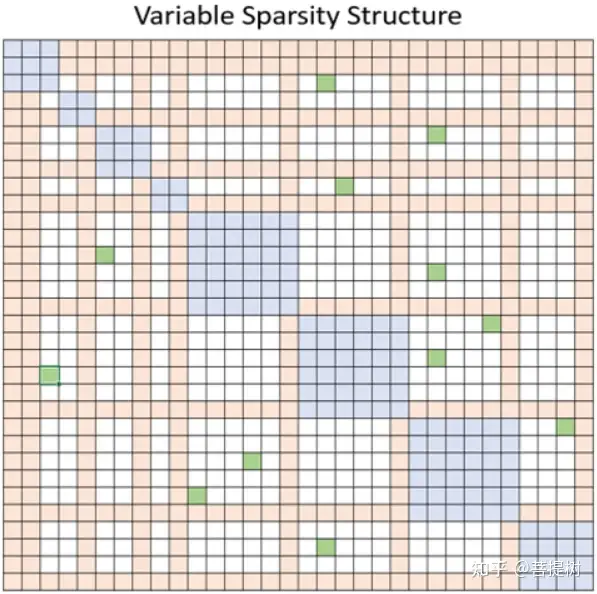

Optimization approaches for Transformers [Part 2]

ZeroQuant与SmoothQuant量化总结-CSDN博客

如何评价微软开源的分布式训练框架deepspeed? - 知乎

Gioele Crispo on LinkedIn: Discover ChatGPT by asking it: Advantages, Disadvantages and Secrets

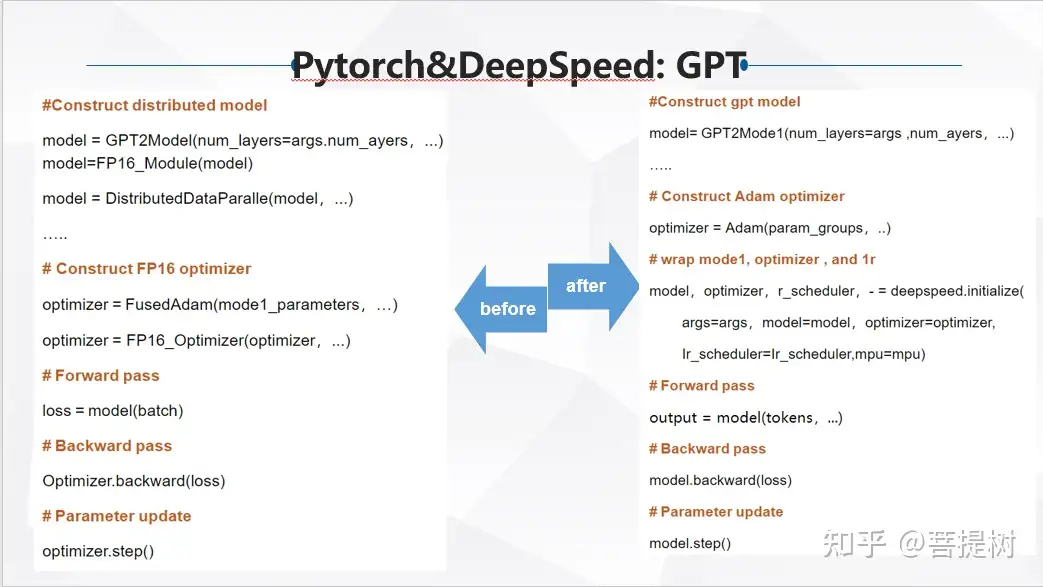

DeepSpeed介绍- 知乎

GitHub - microsoft/DeepSpeed: DeepSpeed is a deep learning optimization library that makes distributed training and inference easy, efficient, and effective.

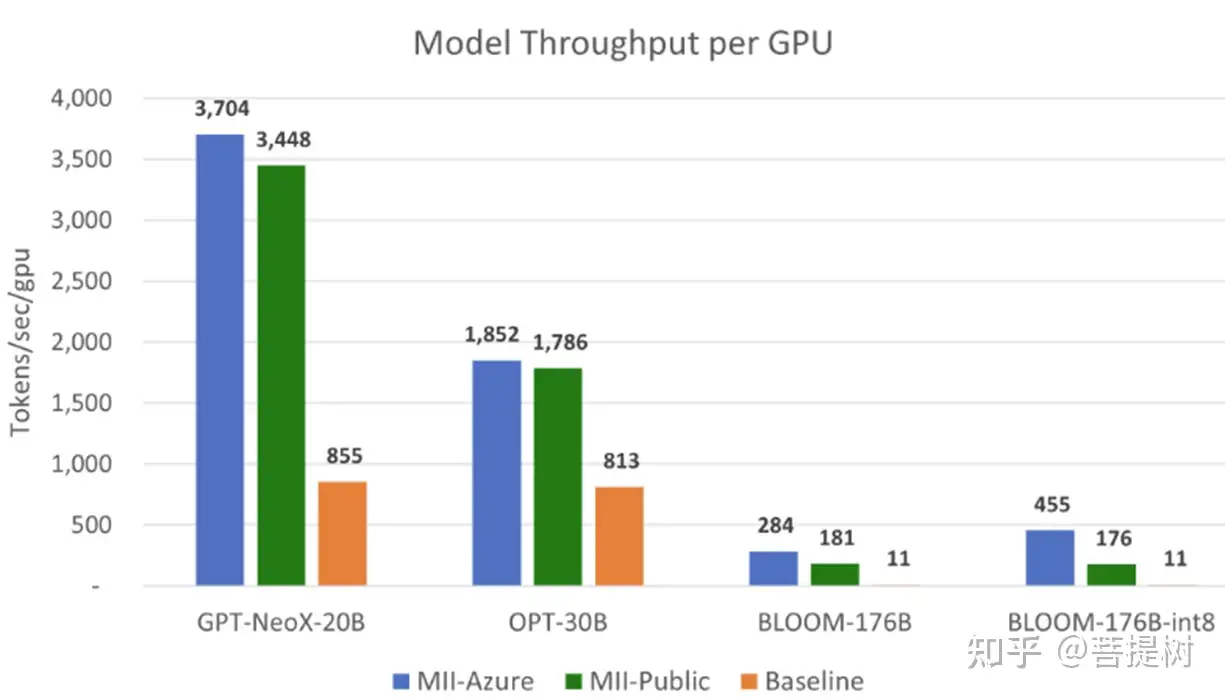

Your Daily AI Research tl;dr - 2022-07-27 🧠

DeepSpeed介绍- 知乎

DeepSpeed Compression: A composable library for extreme compression and zero-cost quantization - Microsoft Research