RedPajama replicates LLaMA dataset to build open-source, state-of-the-art LLMs

By A Mystery Man Writer

RedPajama, a project that builds fully open-source large language models, has released a 1.2-trillion-token dataset that follows the LLaMA training-data recipe.

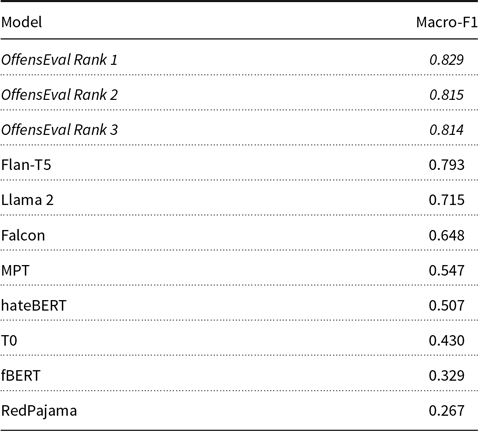

OffensEval 2023: Offensive language identification in the age of Large Language Models, Natural Language Engineering

Timeline of computing 2020–present - Wikipedia

RedPajama - Llama is getting Open Source!

The data that trains AI is under the spotlight — and even I'm weirded out

What is RedPajama? - by Michael Spencer

Cloud Intelligence at the speed of 5000 tok/s - with Ce Zhang and Vipul Ved Prakash of Together AI

Why LLaMA-2 is such a Big Deal

Why LLaMA-2 is such a Big Deal

1. LLM Ingredients: Training Data - Designing Large Language Model Applications [Book]

static.premai.io/book/models_codellama-scores.png

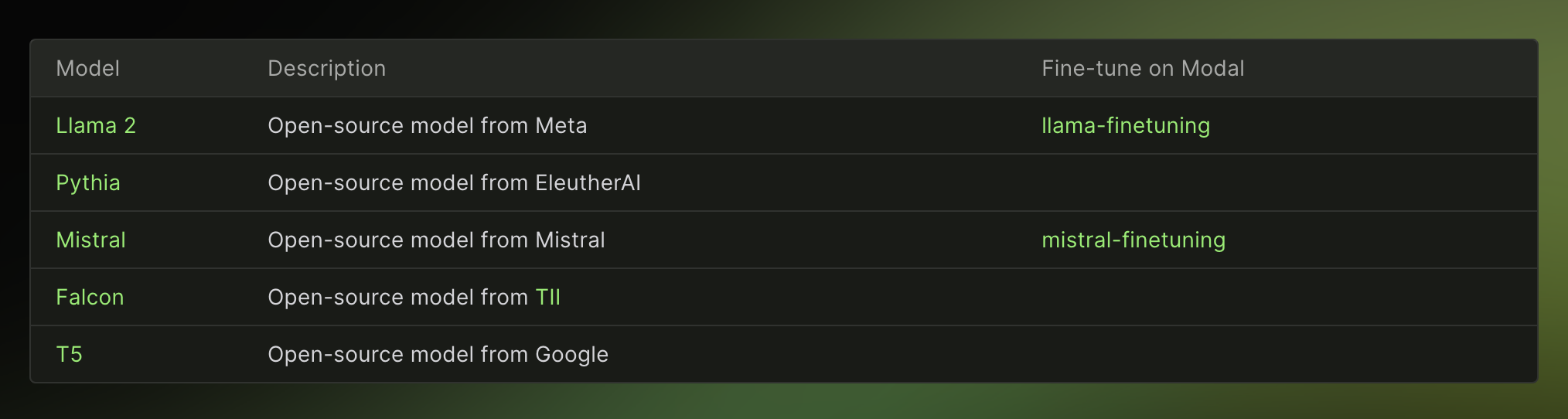

Best Open Source LLMs of 2024 — Klu

static./static/2023/weird-world-l

From ChatGPT to LLaMA to RedPajama: I'm Switching My Interest to Open-Source Language Models, by Yeyu Huang

RAG Is A Hack - with Jerry Liu from LlamaIndex – Latent Space: The AI Engineer Podcast — Practitioners talking LLMs, CodeGen, Agents, Multimodality, AI UX, GPU Infra and all things Software 3.0

今日気になったAI系のニュース【23/4/24】|shanda

- Little Boy's Classic Pajama Set - Kid's Jammies – Little English

- Red Pajama

- HOT PILLXIOWGEWRH 601] Red Pajamas Sets Women Nightwear Pajamas Fashion Female Solid Color Long Sleeve Blouse Pants Set Sleepwear Long Sleeve Pajamas

- Red Pajama 2: The Public Dataset With a Whopping 30 Trillion Tokens

- Book Series Review: Llama Llama Red Pajama by Anna Dewdney

- Hip-hop vs. street dance: Which one is for you?

- shapermint.uk Reviews Read Customer Service Reviews of shapermint.uk

- Carole Martin Full-Freedom Comfort Front Closure Bra for Women

- One Dri-fit Camo Mid-rise Tight - Medium Olive-White – Carbon38

- Principal Low-Impact Bra Active wear for women, 2 piece outfits