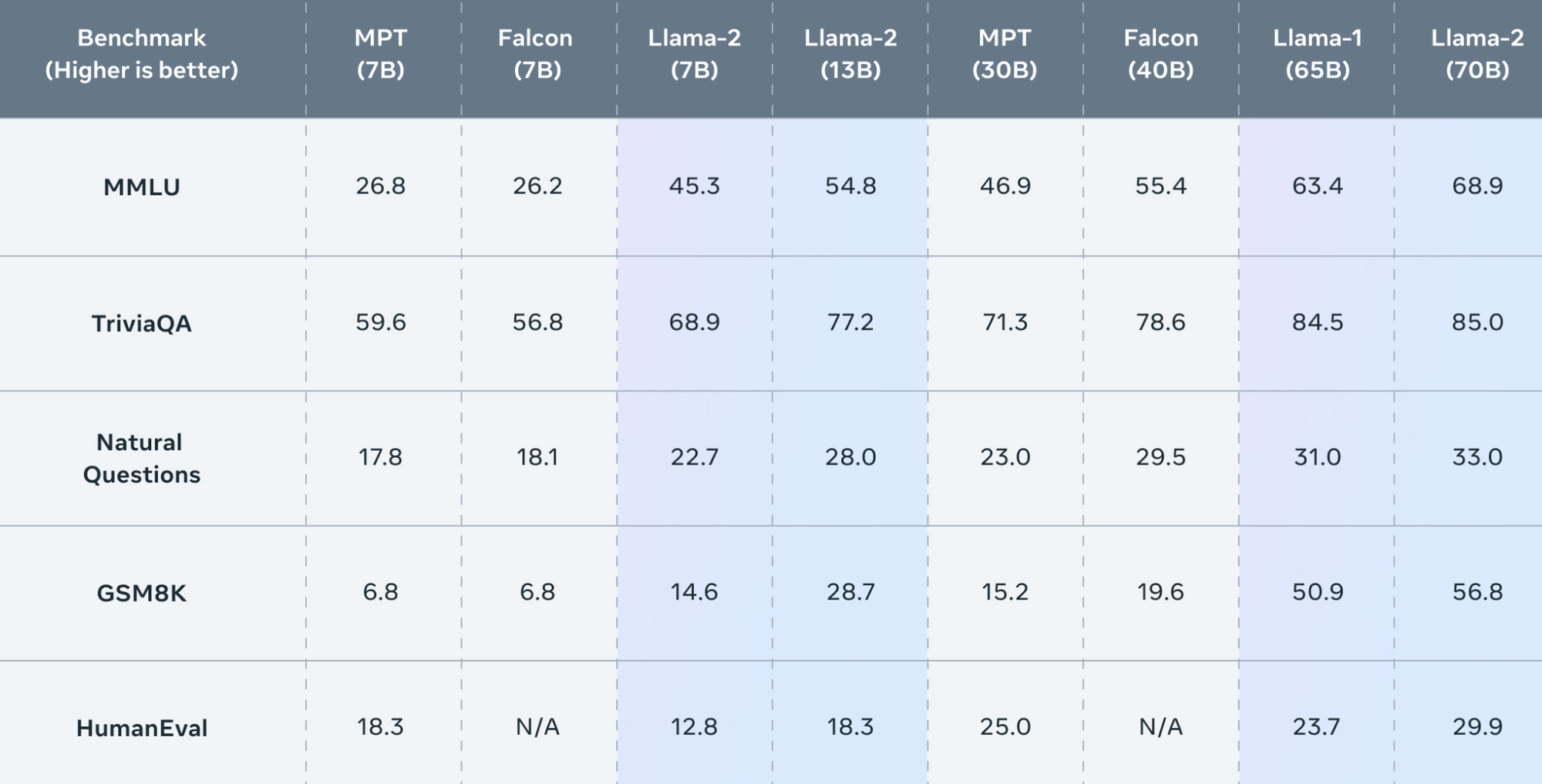

MPT-30B: Raising the bar for open-source foundation models

By A Mystery Man Writer

Introducing MPT-30B, a new, more powerful member of our Foundation Series of open-source models, trained with an 8k context length on NVIDIA H100 Tensor Core GPUs.

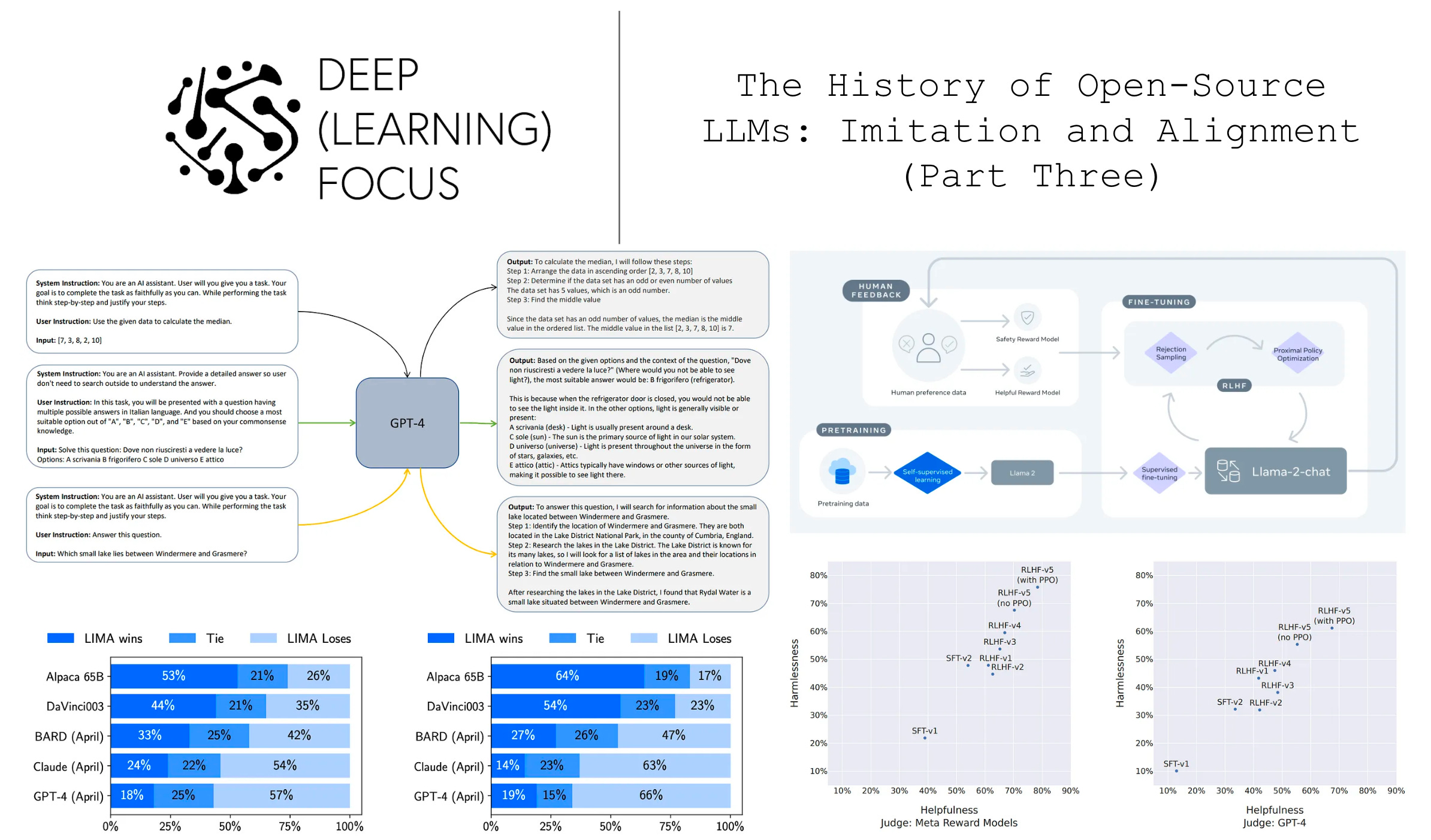
